GPU CLUSTER

(PARTITION)

![]()

WEKA AI-OPTIMIZING CLUSTER STORAGE

![]()

CRAY CLUSTER STOR E1000

![]()

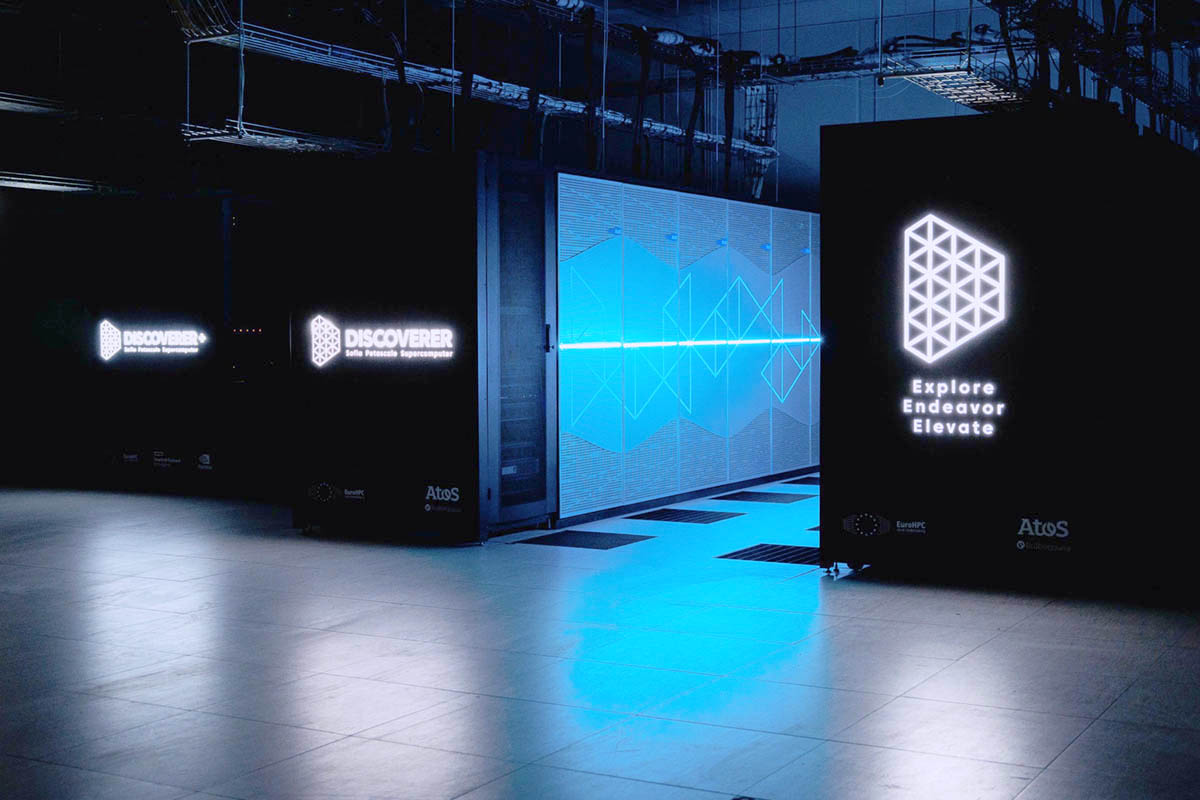

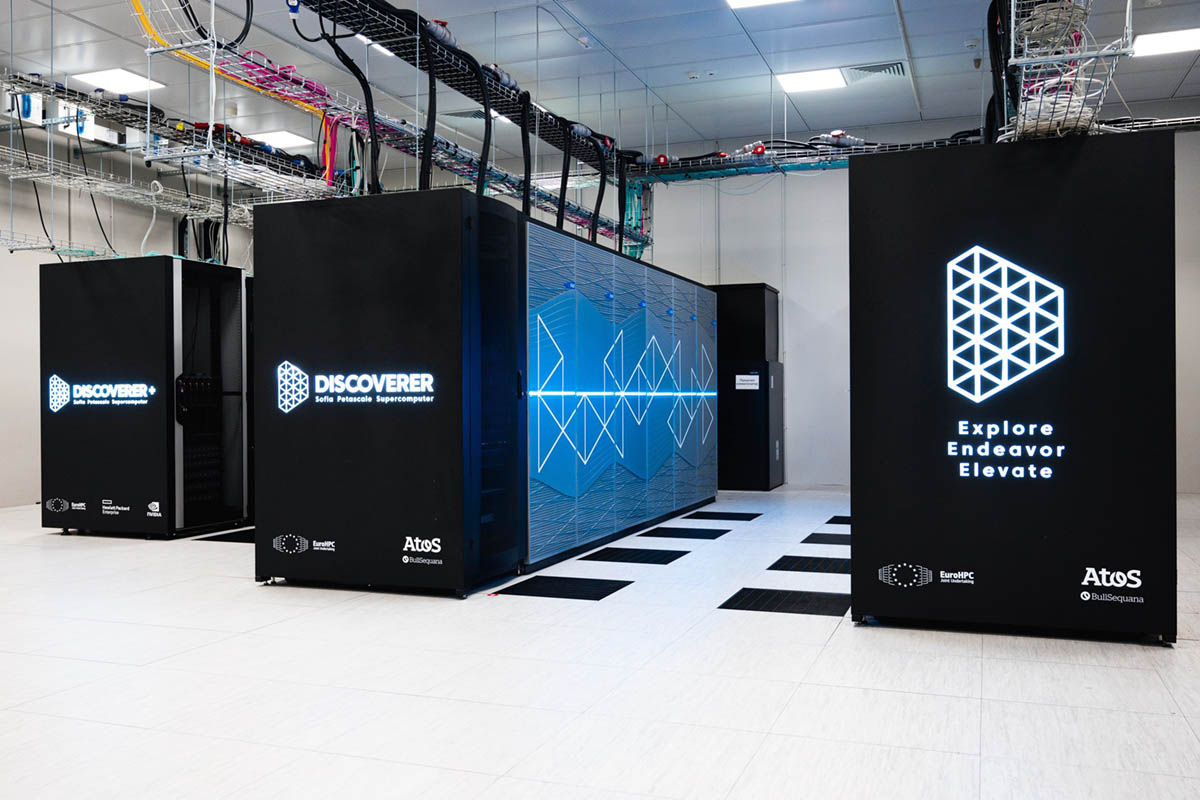

GALLERY

DISCOVERER+: Aggregated Computational Resources

1. GPU CLUSTER (PARTITION):

Our newly deployed GPU cluster runs on four brand-new NVIDIA DGX H200 compute servers. The system significantly increases productivity across a wide range of artificial intelligence (AI) and high-performance computing (HPC) workflows. Combining the hardware features of the H200 Tensor Core GPUs with the latest NVIDIA software libraries and middleware allows flexible code optimization and fine-grained control of the computational resources. In addition, high-speed network connectivity across the fabric and a fast connection to the Internet provide fast data access and transfer.

As a result, our users have access to a highly productive, easy-to-operate parallel compute system that can increase the effectiveness and reduce the cost of both AI model training and inference. It can also perform complex scientific computations in support of novel research in drug design and drug delivery, weather and wildfire forecasting, computational fluid dynamics, cryptography, network security, blockchain, finance, big data, computational and quantum chemistry, and many other fields.

System Specifications of the Cluster

4 × DGX H200 server systems delivered by NVIDIA

- GPU: 32 × NVIDIA H200 Tensor Core (8 per compute server)

- Total GPU Memory: 4,512GB (1,128GB per compute server)

- Total Compute Performance: 128 petaFLOPS FP8

CPU: 4 × Dual Intel® Xeon® Platinum 8480C Processors (one dual-socket pair per compute server)

- 448 Cores Total (112 per compute server)

- Base Clock: 2.00 GHz | Boost Clock: up to 3.80 GHz (given adequate cooling)

- System Memory: 8TB (2TB per compute server)
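As a consistency check, scaling NVIDIA's published per-system DGX H200 figures (8 GPUs with 141GB of memory each, 32 petaFLOPS FP8, 112 CPU cores, 2TB of system memory) by the four servers gives the partition-wide totals. The dictionary keys below are illustrative names, not part of any tool.

```python
# Per-system figures from NVIDIA's published DGX H200 specification.
DGX_H200 = {
    "gpus": 8,
    "gpu_memory_gb": 8 * 141,  # 141GB of HBM3e per H200 GPU
    "fp8_pflops": 32,          # NVIDIA's headline FP8 figure per system
    "cpu_cores": 112,          # 2 x Xeon Platinum 8480C, 56 cores each
    "system_memory_tb": 2,
}

NUM_SERVERS = 4


def cluster_totals(per_server: dict, servers: int) -> dict:
    """Scale every per-server figure linearly to the whole partition."""
    return {key: value * servers for key, value in per_server.items()}


totals = cluster_totals(DGX_H200, NUM_SERVERS)
print(totals)
# {'gpus': 32, 'gpu_memory_gb': 4512, 'fp8_pflops': 128, 'cpu_cores': 448, 'system_memory_tb': 8}
```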

GPU-to-GPU interconnect

4 × NVIDIA NVSwitch™ on each compute server

Provides high-speed internal (per compute node) GPU-to-GPU communication

Optimized for AI model training and scientific computing
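To give a sense of the traffic the NVSwitch fabric carries during data-parallel training: in a ring all-reduce over N GPUs, each GPU sends 2(N−1)/N times the size of the buffer being reduced (one reduce-scatter pass plus one all-gather pass). The sketch applies this textbook formula to the 8 GPUs inside one DGX H200; the 10GB gradient buffer is a hypothetical example, and communication libraries such as NCCL may pick other algorithms (for example tree-based ones) at run time.

```python
def ring_allreduce_bytes_per_gpu(buffer_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce (reduce-scatter + all-gather).

    Each of the two phases takes N - 1 steps, and every step moves one
    chunk of size buffer_bytes / N, giving 2 * (N - 1) / N * buffer_bytes.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * buffer_bytes


GPUS_PER_NODE = 8        # GPUs linked through NVSwitch in one DGX H200
gradient_bytes = 10e9    # hypothetical 10GB gradient buffer

sent = ring_allreduce_bytes_per_gpu(gradient_bytes, GPUS_PER_NODE)
print(f"{sent / 1e9:.2f} GB sent per GPU")  # 17.50 GB sent per GPU
```

The per-GPU volume approaches twice the buffer size as N grows, which is why intra-node GPU-to-GPU bandwidth matters for gradient synchronization.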

Directly Attached Storage (per cluster):

- OS Storage: 8 × 1.92TB on NVMe M.2 SSDs

- Local Scratch Storage: 32 × 3.84TB on NVMe U.2 SSDs

- Total Local Scratch Capacity: ~122TB of high-speed NVMe storage
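The ~122TB figure corresponds to the scratch drives alone. Dividing the drive counts above by the four servers gives 2 OS drives and 8 scratch drives per server; the arithmetic below uses raw, vendor-rated (decimal) capacity, so usable space after formatting will be somewhat lower.

```python
def raw_capacity_tb(servers: int, drives_per_server: int, drive_tb: float) -> float:
    """Raw (vendor-rated, decimal) capacity of identical drives across servers."""
    return servers * drives_per_server * drive_tb


SERVERS = 4
os_tb = raw_capacity_tb(SERVERS, 2, 1.92)       # 2 x 1.92TB M.2 OS drives per server
scratch_tb = raw_capacity_tb(SERVERS, 8, 3.84)  # 8 x 3.84TB U.2 scratch drives per server
print(f"OS: {os_tb:.2f}TB, scratch: {scratch_tb:.2f}TB")  # OS: 15.36TB, scratch: 122.88TB
```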

Networking & Interconnect

High-Speed InfiniBand/Ethernet over Fabric:

- 16 × OSFP ports serving 32 × single-port NVIDIA ConnectX-7 VPI

- 8 × dual-port QSFP112 NVIDIA ConnectX-7 VPI

- Up to 400Gb/s per link (InfiniBand/Ethernet)
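Per server, the counts above work out to 8 single-port ConnectX-7 links and 2 dual-port QSFP112 adapters (4 ports), each at up to 400Gb/s. The sketch converts these line rates to GB/s; it ignores protocol and encoding overhead, so real application throughput will be lower.

```python
GBIT_PER_GBYTE = 8


def aggregate_gbytes_per_s(ports: int, gbit_per_port: float) -> float:
    """Sum of line rates, converted from Gb/s to GB/s (no protocol overhead)."""
    return ports * gbit_per_port / GBIT_PER_GBYTE


# Per DGX H200: the cluster-wide counts above divided by four servers
compute_fabric = aggregate_gbytes_per_s(ports=8, gbit_per_port=400)  # single-port ConnectX-7
qsfp_fabric = aggregate_gbytes_per_s(ports=4, gbit_per_port=400)     # 2 x dual-port QSFP112
print(compute_fabric, qsfp_fabric)  # 400.0 200.0
```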

Management Network

- 10Gb/s onboard NIC (RJ45 network interface)

- 100Gb/s Ethernet NIC

- Host Baseboard Management Controller (BMC) with RJ45 network interface

Connection to the Internet

- 10Gb/s aggregated link to GÉANT
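For planning external data transfers, a rough lower bound on transfer time follows from the link rate alone. The sketch assumes decimal units (1TB = 10^12 bytes); the 70% efficiency factor is a hypothetical value standing in for protocol overhead and shared use of the link.

```python
def transfer_time_s(size_tb: float, link_gbit_s: float, efficiency: float = 1.0) -> float:
    """Idealized time to move size_tb (decimal TB) over a link of link_gbit_s."""
    bits = size_tb * 1e12 * 8
    return bits / (link_gbit_s * 1e9 * efficiency)


# 1TB over the 10Gb/s GÉANT link at line rate, then at a hypothetical 70% efficiency
print(round(transfer_time_s(1, 10)))       # 800
print(round(transfer_time_s(1, 10, 0.7)))  # 1143
```

Even at full line rate, a 1TB dataset takes over 13 minutes to arrive, so large datasets are best staged once onto the cluster storage rather than fetched repeatedly.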

Software & Management

Supported Operating Systems:

- DGX OS / Ubuntu / RHEL / Rocky Linux

Preloaded AI & HPC-Optimized Software:

- CUDA libraries

- NVIDIA HPC SDK

- cuDNN

- NVIDIA container platform

- Python 3.13

- NVIDIA AI Enterprise (AI software stack optimization)

- NVIDIA Base Command (cluster orchestration and management)

2. WEKA AI-OPTIMIZING CLUSTER STORAGE WITH GPUDIRECT STORAGE (GDS) SUPPORT

Key Features:

- High-Speed Storage: NVMe-based architecture with sub-250 microsecond latency.

- Throughput: Delivers over 80GB/s aggregate read/write throughput.

- InfiniBand Connectivity: Supports HDR 200Gb/s and EDR 100Gb/s InfiniBand for ultra-low latency and high-throughput interconnects, ensuring efficient data movement across storage nodes and compute clusters.

- Direct GPU Access & Bandwidth: Supports NVIDIA GPUDirect Storage (GDS), enabling direct data transfers from NVMe storage to GPU memory over RDMA, reducing CPU overhead and improving AI/ML training performance.

- Scalability: Supports trillions of files and up to 14 exabytes of managed capacity.

- Multi-Protocol Access: Supports POSIX, NFS, SMB, S3, and GPUDirect Storage (GDS).

- Fault Tolerance & Security: Distributed erasure coding, encryption (at-rest and in-flight), and non-disruptive upgrades.

- Optimized for AI & HPC: Designed for high-performance computing, AI training, genomics, and media workloads.
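Erasure coding stores extra parity so that lost data can be rebuilt from the surviving pieces. WEKA's actual scheme is not described here and tolerates more than one failure; the sketch shows only the simplest single-parity (XOR) case, with hypothetical 4-byte blocks, to illustrate how a missing block is reconstructed.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical stripe of three data blocks plus one parity block
data = [b"GPU0", b"GPU1", b"GPU2"]
parity = xor_blocks(data)

# Simulate losing block 1, then rebuild it from the survivors and the parity
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'GPU1'
```

Production systems use codes with two or more parity blocks per stripe (Reed-Solomon-style), so that several simultaneous failures can be survived; the rebuild principle is the same.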

This system ensures high reliability, extreme performance, and scalability, making it ideal for AI/ML model training and inference, real-time analytics, and large-scale HPC applications.

It is connected to each NVIDIA DGX H200 compute server through 8 × HDR InfiniBand adapters. The Weka client runs in polling mode on each compute node and dedicates (locks) 8 CPU cores.
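Two practical consequences follow from this setup: how many cores remain for user jobs on each node, and whether a node's links or the storage system caps a single node's read rate. The sketch uses only figures from this page, assumes the 8 locked cores are physical cores, ignores protocol overhead, and treats "over 80GB/s" as exactly 80GB/s.

```python
PHYSICAL_CORES_PER_NODE = 112  # 2 x Xeon Platinum 8480C
WEKA_CLIENT_CORES = 8          # cores locked by the polling Weka client
HDR_LINKS_PER_NODE = 8
HDR_GBIT_S = 200
WEKA_AGGREGATE_GBYTE_S = 80    # "over 80GB/s", taken as a lower bound

cores_for_jobs = PHYSICAL_CORES_PER_NODE - WEKA_CLIENT_CORES
node_link_gbyte_s = HDR_LINKS_PER_NODE * HDR_GBIT_S / 8

# Line-rate ceiling for one node reading from WEKA: whichever side is smaller
single_node_ceiling = min(node_link_gbyte_s, WEKA_AGGREGATE_GBYTE_S)

print(cores_for_jobs, node_link_gbyte_s, single_node_ceiling)  # 104 200.0 80
```

With these figures, one node's links (200GB/s) exceed the storage system's aggregate rate, so the storage side is the limiting factor, and it is shared among all four nodes.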

3. CRAY CLUSTERSTOR E1000 – 1-RACK SYSTEM:

System Specifications

- Total Capacity: 5.1PB

- Rack Form Factor: 1 full storage rack

- File System: Lustre (supports LDISKFS or OpenZFS as backend)

- Network Interface: Supports InfiniBand HDR/EDR, 100/200Gb Ethernet, and HPE Slingshot

- High Availability (HA): Redundant components across all subsystems

- RAID Protection: GridRAID (LDISKFS) or dRAID (OpenZFS) for data integrity and fast recovery
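Both GridRAID and dRAID are declustered-parity schemes: each stripe holds a fixed number of data and parity chunks spread over many drives, and spare capacity is distributed rather than held on idle disks. A rough estimate of the usable fraction of raw capacity is sketched below. The pool layout (8 data + 2 parity per stripe, 2 distributed spares, 53 drives) is hypothetical and is not the documented configuration of this system; padding and metadata overheads are ignored.

```python
def usable_fraction(data: int, parity: int, spares: int, drives: int) -> float:
    """Approximate fraction of raw capacity left for data in a declustered pool.

    Distributed spare capacity equal to `spares` drives is reserved first;
    the remainder is split into stripes of `data` + `parity` chunks.
    """
    spare_fraction = spares / drives
    return (1 - spare_fraction) * data / (data + parity)


# Hypothetical pool: 8 data + 2 parity per stripe, 2 distributed spares, 53 drives
print(f"{usable_fraction(8, 2, 2, 53):.3f}")  # 0.770
```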

Key Features

1. Scalability

- Designed to serve the I/O demands of large-scale, floating-point-intensive workloads.

- Can expand with additional racks for increased performance and capacity.

2. High Performance

- Optimized to keep floating-point-intensive computations supplied with data, ensuring fast data retrieval and processing.

- Uses PCIe Gen4 NVMe SSDs for metadata and high-speed storage.

- Offers peak throughput up to 40GB/s write and 80GB/s read in full configurations.
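One way to read the write figure is as a best-case checkpoint time: dumping the GPU partition's entire 4,512GB of GPU memory at the 40GB/s peak. This is an optimistic bound, since the peak applies to full configurations and real checkpoints share the file system with other jobs.

```python
def write_time_s(size_gb: float, throughput_gb_s: float) -> float:
    """Idealized time to write size_gb at a sustained throughput."""
    return size_gb / throughput_gb_s


TOTAL_GPU_MEMORY_GB = 4512  # total GPU memory of the GPU partition
PEAK_WRITE_GB_S = 40        # E1000 peak write, full configuration

print(f"{write_time_s(TOTAL_GPU_MEMORY_GB, PEAK_WRITE_GB_S):.1f} s")  # 112.8 s
```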

3. InfiniBand Connectivity & Bandwidth

- InfiniBand HDR/EDR support for ultra-low latency and high bandwidth.

- Achieves up to 200Gb/s per HDR connection (100Gb/s per EDR connection), ensuring fast and efficient data transfers between compute nodes and storage.

- Supports multiple InfiniBand uplinks, allowing seamless scaling of storage throughput.
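Scaling through multiple uplinks can be quantified: to carry the 80GB/s peak read rate, the combined line rate of the uplinks must reach 640Gb/s. The sketch computes the minimum link count at HDR and EDR rates, ignoring protocol overhead, so in practice some headroom above this minimum is advisable.

```python
import math


def uplinks_needed(target_gbyte_s: float, link_gbit_s: float) -> int:
    """Minimum number of links whose combined line rate meets target_gbyte_s."""
    return math.ceil(target_gbyte_s * 8 / link_gbit_s)


print(uplinks_needed(80, 200))  # 4 HDR links
print(uplinks_needed(80, 100))  # 7 EDR links
```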

4. Storage Configuration

- HDD-Based SSU (Scalable Storage Unit): Uses high-density 4U106 HDD enclosures (106 × 7,200 RPM SAS HDDs per enclosure).

- Data Tiering: Hybrid configurations allow SSD for high-speed access and HDD for cost-effective bulk storage.

- RAID Configurations: Uses GridRAID (LDISKFS) or dRAID (OpenZFS) to minimize data loss and maximize performance.

5. Management & Data Movement

- ClusterStor Data Services: Automates storage tiering, migration, and data lifecycle management.

- HPE DMF (Data Management Framework): Manages inter-file system data movement, archiving, and data protection.
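Automated tiering usually works from policies such as "move files that have not been accessed for N days from SSD to HDD". The sketch below illustrates that idea only; it is not the ClusterStor Data Services or HPE DMF interface. `FileRecord`, `select_for_demotion`, the 30-day threshold, and the file paths are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileRecord:
    path: str
    tier: str  # "ssd" or "hdd" (hypothetical tier labels)
    last_access: datetime


def select_for_demotion(files, now, max_idle=timedelta(days=30)):
    """Return paths on the SSD tier that have been idle longer than max_idle."""
    return [f.path for f in files
            if f.tier == "ssd" and now - f.last_access > max_idle]


now = datetime(2025, 1, 31)
files = [
    FileRecord("/lustre/proj/a.h5", "ssd", datetime(2025, 1, 30)),
    FileRecord("/lustre/proj/b.h5", "ssd", datetime(2024, 11, 2)),
    FileRecord("/lustre/proj/c.h5", "hdd", datetime(2024, 6, 1)),
]
print(select_for_demotion(files, now))  # ['/lustre/proj/b.h5']
```

Files already on the HDD tier are skipped, and recently used files stay on SSD; a real policy engine would also handle promotion back to SSD and capacity thresholds.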